Large Language Models (LLMs), such as OpenAI GPT-4, are state-of-the-art deep learning models based on the transformer architecture. These models are pre-trained on vast, internet-scale datasets to predict the next token in a sequence. LLMs are already transforming a wide range of business solutions because of their ability to generate human-like text at scale.

However, despite their groundbreaking potential, LLMs present two major challenges due to their pre-trained nature. Firstly, they often struggle to provide coherent and accurate responses on topics they have not been trained on, especially content that is not publicly available. Secondly, LLMs are prone to producing unreliable responses, often referred to as “hallucinations,” where they generate confidently incorrect information.

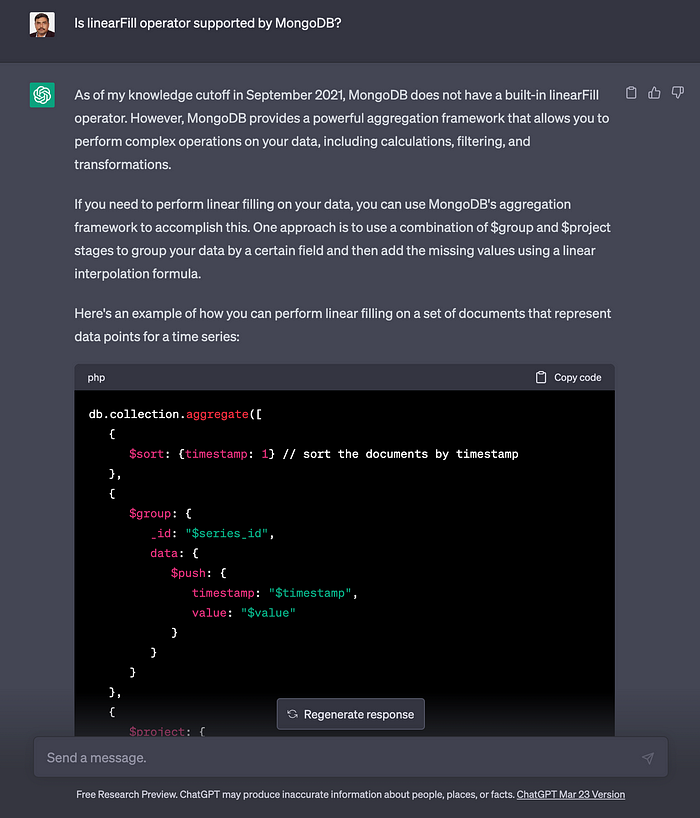

For example, as of this writing, ChatGPT cannot provide a good answer to a question about the “linearFill” operator available in the MongoDB aggregation pipeline.

Although it is possible to train a model specific to your domain and content, as the situation described above would require, doing so can be quite costly. Instead, you can overcome these limitations of LLMs using one of the following approaches:

- Fine-tuning & Transfer Learning: Instead of training a model from scratch, transfer learning takes an already pre-trained model and fine-tunes it on a smaller dataset specific to a task or domain. This helps the model specialize in that area and produce more accurate responses there.

- In-context Learning & Prompt engineering: Relevant context, for example from a knowledge graph, can be supplied to the LLM along with the question, helping it understand the relationships between different pieces of information. Prompt engineering is used to incorporate this context into the prompt, so that the LLM produces more accurate and relevant responses.

- Hybrid Approaches: Finally, a hybrid approach combines fine-tuning, knowledge graphs, and automated validation of responses.

While fine-tuning a model is cost-effective compared to training your own model from scratch, it can still be computationally expensive when the model and the training dataset are large. Fine-tuning can also lead to overfitting if the model is trained on a limited dataset, resulting in poor performance on unseen data.

In-context learning is a fascinating aspect of LLMs that researchers are still exploring. It is the model's ability to quickly adapt to a new task by conditioning on only a few task-specific examples supplied in the prompt, typically fewer than ten, without any update to its weights. By leveraging this phenomenon, along with prompt engineering techniques, we can effectively address our problem: the model picks up the task-specific context from the prompt and generates accurate responses without additional training.
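To make the idea concrete, here is a minimal sketch of few-shot prompting: a handful of worked examples are placed in the prompt, followed by the new input, and the model is expected to continue the pattern. No weights change. The helper name `build_few_shot_prompt` and the `Q:`/`A:` layout are illustrative assumptions, and sending the prompt to an LLM is left out.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input."""
    lines = [instruction.strip(), ""]
    for question, answer in examples:
        # Each example shows the model the input/output pattern it should imitate.
        lines += [f"Q: {question}", f"A: {answer}", ""]
    # The new query ends with an open "A:" for the model to complete.
    lines += [f"Q: {query}", "A:"]
    return "\n".join(lines)


# Hypothetical examples; any small set of labeled input/output pairs works the same way.
examples = [
    ("Which aggregation stage filters documents?", "$match"),
    ("Which aggregation stage reshapes documents?", "$project"),
]
prompt = build_few_shot_prompt(
    "Answer with the name of a single MongoDB aggregation stage.",
    examples,
    "Which aggregation stage groups documents by a key?",
)
print(prompt)
```

The examples do the "teaching" here; swapping them out changes the task without touching the model.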

The remainder of this post walks through the solution design and the tools used to implement it, and provides a working notebook that you can use for your own exploration.

Understanding key concepts

Before we dive into the Q&A solution approach, let’s understand a few key concepts.

- In-context learning

- Retrieval-Augmented Generation (RAG) for Knowledge-Intensive NLP Tasks

- Vector databases

- Prompt engineering

In-context learning is a fascinating phenomenon that has emerged in Large Language Models (LLMs). It allows LLMs to perform tasks by conditioning on a few domain-specific labeled examples (few-shot) or on relevant context alone (zero-shot), without optimizing any parameters. For question answering over a body of documents, this behavior can be put to work with a two-step architecture: a retriever finds the appropriate documents, and an extractor finds the answers in the retrieved documents. One popular approach to implementing this architecture is Retrieval-Augmented Generation (RAG), which endows pre-trained, parametric-memory generation models with a non-parametric memory through a general-purpose fine-tuning approach; the solution in this post uses the same retrieve-then-generate idea but skips the fine-tuning.
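The two-step retriever/extractor architecture can be illustrated with a deliberately simple sketch. Here, word overlap stands in for a real retriever (such as BM25 or a dense vector index), and choosing the best-overlapping sentence stands in for an extractive reader model; in RAG, a generative model produces the answer from the retrieved passages instead. The function names and sample documents are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase word tokens (the leading $ of operator names is dropped)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=1):
    """Step 1, the retriever: rank documents by how many query words they share."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def extract(query, document):
    """Step 2, the extractor: return the sentence that shares the most words with the query."""
    q = tokenize(query)
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    return max(sentences, key=lambda s: len(q & tokenize(s)))


# Hypothetical mini-corpus standing in for a document collection.
documents = [
    "The $match stage filters documents. It should appear early in a pipeline to reduce work.",
    "The $group stage groups documents by a key. It can compute totals such as sums and averages.",
    "The $linearFill window operator fills null and missing field values using linear "
    "interpolation. It is used inside the $setWindowFields stage.",
]
question = "Is linearFill operator supported by MongoDB?"
best_doc = retrieve(question, documents)[0]
print(extract(question, best_doc))
```

Splitting the work this way keeps the expensive step (reading for the answer) limited to the few documents the cheap step selected.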

In this workflow, before a question is sent to the LLM, relevant information is retrieved based on the user query and supplied to the LLM through prompt engineering. To retrieve data by its semantic or contextual meaning rather than by exact keywords, a vector database stores the data as high-dimensional vectors, which are numerical representations of its features or attributes. The vectors are generated by applying a transformation or embedding function to the raw data. The main advantage of a vector database is that it supports querying by similarity or vector distance, so the most relevant data can be retrieved quickly, based on its meaning.
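The sketch below shows the core vector-database operation under heavy simplification: each text is turned into a fixed-length vector, and a query is answered by ranking stored vectors by cosine similarity. The "embedding" here is a hashed bag of words (the hashing trick), which only captures shared words, not meaning; a real system would call an embeddings model and a vector database such as Pinecone. `embed`, `InMemoryVectorStore`, and the 256-dimension size are illustrative assumptions.

```python
import math
import re
import zlib

DIM = 256  # hypothetical size; real embedding models produce hundreds to thousands of dimensions


def embed(text):
    """Toy embedding via the hashing trick: count words into DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a, b):
    """Cosine similarity; embed() returns unit-length vectors, so this is a dot product."""
    return sum(x * y for x, y in zip(a, b))


class InMemoryVectorStore:
    """Minimal stand-in for a vector database: a list of (vector, text) records."""

    def __init__(self):
        self.records = []

    def add(self, text):
        # Embed once at insertion time, as a vector database does at upsert time.
        self.records.append((embed(text), text))

    def search(self, query, k=2):
        """Return the k stored texts whose vectors are most similar to the query vector."""
        q = embed(query)
        ranked = sorted(self.records, key=lambda rec: cosine(q, rec[0]), reverse=True)
        return [text for _, text in ranked[:k]]


docs = [
    "$match filters documents early in a pipeline.",
    "$group groups documents by a key.",
    "$linearFill fills missing values using linear interpolation.",
]
store = InMemoryVectorStore()
for doc in docs:
    store.add(doc)
print(store.search("Which operator fills missing values with linear interpolation?", k=1))
```

Normalizing each vector once, when it is stored, lets the search compute cosine similarity as a plain dot product.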

Using the in-context learning behavior of LLMs, combined with the RAG workflow and prompt engineering, you can build a powerful Q&A system over your own private content.

Solution design

Coming back to our original problem: how can we get the right answer from OpenAI GPT-4 to the following user question?

“Is linearFill operator supported by MongoDB?”

The required information can be found in the “Practical MongoDB Aggregations” book: https://www.practical-mongodb-aggregations.com/

The solution design below outlines how we take the content of this book and use it for in-context learning and Q&A with GPT-4.

The solution design comprises the following steps:

- Extracting content from the pages of the book.

- Splitting the content into smaller, more manageable chunks of data.

- Converting the chunks of data into vector embeddings using an OpenAI embeddings model.

- Storing the embeddings in a vector database, such as Pinecone.

- Receiving a user query.

- Converting the user query into a vector embedding.

- Running a similarity search on the vector database index to identify relevant documents.

- Compressing or extracting only the related content from the documents to limit the amount of data sent to the LLM.

- Utilizing prompt engineering to create a prompt that combines the user query with the relevant documents retrieved from the vector database.

- Prompting the LLM with the prepared prompt.

- Responding to the user with the answer generated by the LLM.

Each of these steps feeds the next, so they must be executed in this order. The first four steps build the index ahead of time, while the remaining steps run for every user query. By following this design and using appropriate tools, we can effectively address our problem and generate accurate responses to user queries.
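Putting the steps together, here is a compact, dependency-free sketch of the same flow. It is not the post's LangChain/Pinecone code: word counts stand in for OpenAI embeddings, a Python list stands in for the Pinecone index, sentence filtering stands in for contextual compression, and the final call to GPT-4 (the last two steps) is left out. All function names, the sample page names, the stop-word list, and the tiny chunk sizes are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stop-word list so that words like "is" and "by" do not drive matches.
STOPWORDS = {"a", "an", "and", "by", "in", "is", "it", "of", "so", "that", "the", "to"}


def split_into_chunks(text, chunk_size=20, overlap=5):
    """Step 2: split text into overlapping windows of words (sizes are tiny for the demo)."""
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text):
    """Steps 3 and 6: toy sparse 'embedding' of word counts (stand-in for a real model)."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(pages):
    """Steps 1-4: chunk each page and store (embedding, chunk, source) entries."""
    index = []
    for source, text in pages.items():
        for chunk in split_into_chunks(text):
            index.append((embed(chunk), chunk, source))
    return index


def search(index, query, k=2):
    """Steps 5-7: embed the query and return up to k matching (chunk, source) pairs."""
    q = embed(query)
    scored = [(cosine(q, vec), chunk, source) for vec, chunk, source in index]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(chunk, source) for score, chunk, source in scored[:k] if score > 0]


def compress(query, chunk):
    """Step 8: keep only the sentences that share a word with the query."""
    q = set(embed(query))
    sentences = re.split(r"(?<=[.!?])\s+", chunk)
    kept = [s for s in sentences if q & set(embed(s))]
    return " ".join(kept) or chunk


def build_prompt(query, hits):
    """Step 9: combine the compressed context, tagged with its source, and the question."""
    context = "\n".join(f"[{source}] {compress(query, chunk)}" for chunk, source in hits)
    return (
        "Answer the question using only the context below and cite the sources in brackets. "
        "If the answer is not in the context, say that you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


# Hypothetical sample pages standing in for content extracted from the book.
pages = {
    "match-stage": "$match filters documents. Place $match early in a pipeline "
                   "so that later stages process fewer documents.",
    "group-stage": "$group groups documents by a key and computes accumulators such as $sum.",
    "window-operators": "The $linearFill operator fills null and missing field values using "
                        "linear interpolation. It is used inside the $setWindowFields stage "
                        "of an aggregation pipeline.",
}

index = build_index(pages)
question = "Is linearFill operator supported by MongoDB?"
prompt = build_prompt(question, search(index, question))
print(prompt)  # Steps 10-11 would send this prompt to GPT-4 and return its answer.
```

With realistic data, chunks are usually hundreds of tokens long; the overlap reduces the chance that a relevant sentence is cut in half at a chunk boundary.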

To simplify implementing the solution design, we will use LangChain, which offers a set of modular abstractions for the components our design requires. LangChain also provides prebuilt chains tailored to specific use cases, such as Q&A, which make it easy to assemble and orchestrate these components.

By using LangChain’s modular abstractions and prebuilt chains, we avoid writing the code for each individual step ourselves and can focus on assembling and orchestrating the components. This streamlines implementation and reduces the amount of code we need to write.

The following diagram illustrates how we use LangChain and its components to orchestrate content extraction and the setup of the Pinecone vector database.

Fig 2: Q&A Solution Setup using LangChain
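The first setup step, extracting content from the book's pages, can be sketched with Python's standard-library `html.parser`. This is an illustrative stand-in for the LangChain components used in the post's setup: it assumes the HTML has already been downloaded, collects the visible text, and skips `<script>` and `<style>` contents. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text from an HTML page, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0  # > 0 while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped element.
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def extract_text(html):
    """Return the page's visible text as a single space-separated string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


sample_html = (
    "<html><head><style>p { color: gray; }</style></head>"
    "<body><h1>Window operators</h1><p>$linearFill fills gaps in a series.</p>"
    "<script>console.log('analytics');</script></body></html>"
)
print(extract_text(sample_html))
```

The extracted text is what the splitting and embedding steps would then consume.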

The diagram below details the steps for Q&A.

Output

As you can see from the results of our in-context learning design, the output is significantly more accurate and includes references to the sources from which the information was obtained.

The chain we created added relevant content from the Pinecone vector database and created the prompt as follows.

How did we achieve this?

Now, let’s discuss the code and the process we used to achieve these results. You can fork the code and tailor it to your own use case, and you may also want to explore other available vector databases.

https://engineering.peerislands.io/media/13695bd04ea6fff27e17c0c646fac169

For further exploration

I can think of several ways to improve the solution above:

- Accelerating indexing using Ray, especially when dealing with large volumes of data.

- Running the LLM locally to ensure complete privacy of content and interactions.

- Exploring other vector databases and their capabilities.

- Hosting the solution using Streamlit.

I hope you found this article informative and useful. I’m interested in hearing your feedback and thoughts on how we can further improve the solution, as well as your own ideas for experimentation.

Given the rapid pace of innovation in the AI space, I’m excited and curious to see what the future holds.

References

- Integrating ChatGPT with internal knowledge base and question-answer platform: an excellent article that summarizes the concepts and how to go about building a solution like this. My blog post and solution are heavily influenced by this article.

- How does in-context learning work

- Solving a machine-learning mystery

- Zero-Shot Open-Book Question Answering

- Retrieval-Augmented Generation (RAG) for Knowledge-Intensive NLP Tasks

- Vector databases: https://learn.microsoft.com/en-us/semantic-kernel/concepts-ai/vectordb

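- Pre-training, fine-tuning and in-context learning in Large Language Models (LLMs): https://medium.com/@atmabodha/pre-training-fine-tuning-and-in-context-learning-in-large-language-models-llms-dd483707b122

- Qdrant, LangChain integration: https://qdrant.tech/articles/langchain-integration/ (influential in my solution diagrams).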

- Towards Understanding In-context Learning

- Contextual Compression

- Semantic Search